Why Is Learning Docker Containers So Important in the IT Industry?

Ashish Pandey

Do you know one of the main reasons for the defeat of the USA in the Vietnam War?

The enormous warships could not reach Vietnam's narrow coast, so weapons could not be transported to the war zones in the interior.

Size matters, but sometimes you need thousands of dinghies rather than one large ship.

This principle is at the core of how containers have changed the deployment paradigm in the IT industry.

The building blocks of containers have been part of Unix-like systems for decades (chroot dates back to 1979), but Docker (the company) made them so easy to use and so popular that the words "container" and "Docker" are now commonly used interchangeably, much as we say Google for search or Xerox for photocopy.

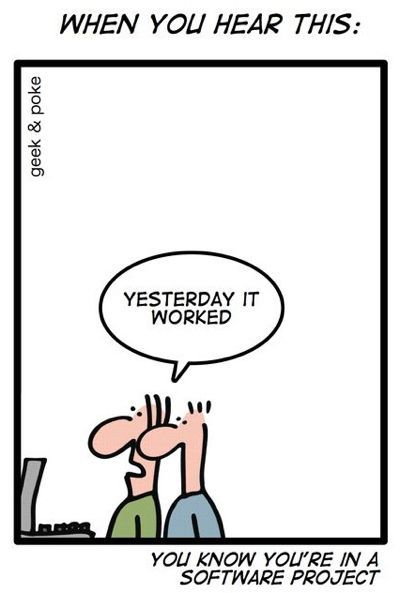

For the uninitiated, containerization (or Dockerization) is a way of packaging your application together with its dependencies so that it does not matter in which environment it is deployed. If a Dockerized application runs on a dev-test machine, the same image can be run on production systems as well. Containers isolate the application from the host system and from other applications running on it, while still using the underlying system resources (CPU, memory, networking, storage) and sharing them with other containers.

With Docker, you can run hundreds of applications of different types, each with its own set of dependency packages, independently on the same host machine.
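As a rough illustration of that independence, the sketch below runs two containers that carry different Python versions side by side on one host. It assumes Docker is already installed and the daemon is running. The function name `show_python_versions` is hypothetical, and the `python:3.8` and `python:3.12` image tags are assumed to be available on Docker Hub; neither comes from the original article.

```shell
# Hypothetical helper: run two containers with different dependency sets on one host.
# Assumes a running Docker daemon and that the python:3.8 and python:3.12 tags
# can be pulled from Docker Hub.
show_python_versions() {
    # Each container ships its own interpreter and libraries; --rm deletes the
    # container as soon as the command exits.
    docker run --rm python:3.8 python --version
    docker run --rm python:3.12 python --version
}
```

Calling `show_python_versions` should print one version line per container, and neither interpreter needs to be installed on the host itself.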

Another advantage of containers is effective dev/prod parity. In a container ecosystem, the same encapsulated application image is used in the dev, test, staging, and production environments. This minimizes post-release surprises in production, surfaces bugs early, and ultimately saves a lot of cost and effort.

Before Docker, we had to launch applications in separate virtual machines (VMs) to achieve this kind of isolation, but VMs are, by design, very resource-intensive. Each VM brings a full-blown guest OS with it and is poor at sharing resources such as libraries, binaries, or even storage space. Docker containers, on the other hand, are very lightweight. Sharing resources with the host machine and with other containers is at the heart of Docker's design.

Because Docker containers are so lightweight, it is common to launch, modify, and delete them within seconds. The only extra requirement is a running Docker daemon, and installing and starting the Docker service takes just a few commands.
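To make the create/delete cycle concrete, here is a minimal sketch, assuming Docker is already installed and the daemon is running. The helper name `demo_lifecycle` and the container name `scratchpad` are made up for illustration.

```shell
# Hypothetical helper: create, list, and delete a throwaway container.
demo_lifecycle() {
    name="${1:-scratchpad}"   # arbitrary container name used for this demo

    # Create and start an Ubuntu container in the background (-d).
    docker run -d --name "$name" ubuntu sleep 300 || return 1

    # List running containers whose name matches.
    docker ps --filter "name=$name"

    # Force-remove the container; -f kills it first if it is still running.
    docker rm -f "$name"
}
```

Running `demo_lifecycle` with no argument uses the default name; pass a different name if `scratchpad` is already taken on your host.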

So Docker is attractive not only for its low resource usage but also for the ease with which containers can be created. Being an open source tool also gives Docker an advantage in adaptability.

Docker was first released in 2013, and Docker, Inc. is already a unicorn, with a valuation exceeding $1.3 billion. Docker is not the only container technology; others include rkt, Mesos, and LXD.

On a standard Amazon Linux EC2 instance, installing and starting Docker is a two-step process:

$ sudo yum install docker

$ sudo service docker start
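A quick way to confirm that the installation worked is to ask the client for its version and check that the daemon answers. The helper name `check_docker` below is hypothetical; it is only a sketch and assumes the `docker` client is on your `PATH` and that you need `sudo` to talk to the daemon.

```shell
# Hypothetical helper: confirm the Docker client is installed and the daemon responds.
check_docker() {
    # Print the client version; fail early if the client is missing.
    docker --version || return 1

    # `docker info` queries the daemon, so it fails when the service is not running.
    if sudo docker info >/dev/null 2>&1; then
        echo "Docker daemon is running"
    else
        echo "Docker daemon is not reachable"
        return 1
    fi
}
```

If the second message appears, starting the service again as shown above is usually the first thing to try.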

To show how simple Docker is, let's take an example where you need an Ubuntu environment for some dev-test activity. Once the Docker daemon is up and running, launching a container that runs Ubuntu is just one command away:

$ docker run -i -t ubuntu /bin/bash

This command will:

- Search for the ubuntu Docker image locally on your host

- If it is not found there, search for an ubuntu image in the public image registry hosted at hub.docker.com (Docker Hub)

- Pull the image to your host machine

- Use the image to launch an Ubuntu container (which, for this purpose, behaves much like an Ubuntu VM)

- Start a Bash shell inside the container

- Attach your terminal to the new shell inside the container

All of the above steps complete within minutes, and most of that time goes into downloading the image to your host. If the image is already available locally, the whole sequence takes less than a second.
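The steps listed above can be spelled out as separate commands. This is only a sketch of the same flow, not how Docker implements it internally: `docker run` already does all of this on its own. The helper name `run_ubuntu_shell` is hypothetical, and a running Docker daemon is assumed.

```shell
# Hypothetical helper: the flow of `docker run -i -t ubuntu /bin/bash`, step by step.
run_ubuntu_shell() {
    image="${1:-ubuntu}"

    # Look for the image locally; pull it from the registry only if it is missing.
    if ! docker image inspect "$image" >/dev/null 2>&1; then
        docker pull "$image" || return 1
    fi

    # Launch a container from the image, start Bash inside it, and attach
    # the terminal (-i keeps stdin open, -t allocates a terminal).
    docker run -i -t "$image" /bin/bash
}
```

Splitting the flow this way makes it easy to see where the time goes: the `docker pull` step is the slow one, and it is skipped entirely when the image is already on the host.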

By now you can probably imagine how Docker could be useful in your own organization. A few of the benefits are:

- Provides isolation to your application

- Helps automate the deployment process

- Brings Dev/Prod parity

- Saves infrastructure cost

- Makes scaling easier

- … and many more

Container technology has already been adopted by a large number of companies; Google, Cisco, Expedia, and PayPal, among others, use containers extensively. In its official testimonial, PayPal claims a 50% increase in productivity from using Docker. Visa claims to have achieved 10x scalability owing to Docker.

According to Datadog, in a sample of more than 10,000 companies, 25% have already adopted container technology and are running around 700 million containers in real-world use.

A new breed of machine learning and data science engineers is increasingly using and sharing Dockerfiles and Docker images rather than just application code.

Given how fast container usage is growing, it is high time to adopt containers before the ship sails.

Where to start?

Take a look at this Docker course that we have curated; it starts from scratch and takes you to an expert level on Docker.

References:

Datadog's report on Growth of Docker

Latest Valuation of Docker Inc.
